"Fast Non-linear Model Predictive Control for unified trajectory optimization and tracking : Analysis with Inverted Pendulum and Cart Pole"

Underactuated Robotics Course - Final Project

Carnegie Mellon University, USA

Implemented the following paper published in 2016 from scratch and further successfully optimized the algorithm by making modifications on the same. The algorithm was tested in simulation with Cart Pole and Simple Pendulum dynamics.

ICRA 2016 paper - ETH Zurich

As a team of two, the algorithm was implemented in MATLAB and codes for the same can be found in GitHub

Both of us had separate implementations of the algorithms from a learning perspective and both can be found in this link.

Sequential Linear Quadratic Updates (SLQ)

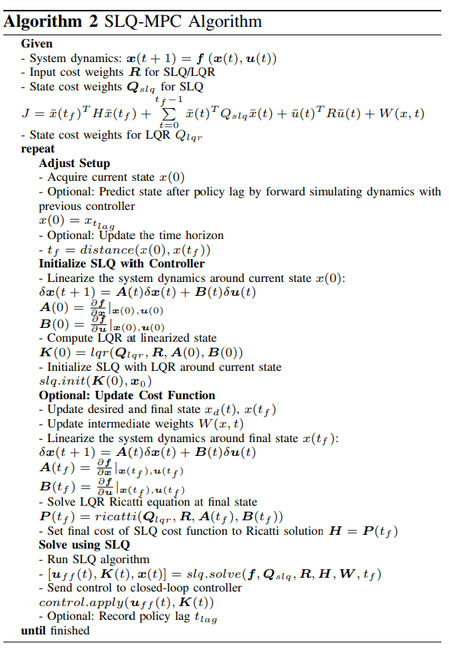

The SLQ algorithm which is used as the optimization method by MPC gives out an improved control policy by updating an initial nominal control input values that generate a trajectory (Shown as Blue in video) with feedback and feedforward terms to track the desired trajectory (Shown as Red).

The feed-forward terms and feedback terms are calculated by solving a Riccati equation backwards in time. The correct gains for the feed-forward term that further reduces the cost function is found by performing a simple line search. A snippet of the detailed algorithm from the paper is given below.

The video following the code shows our implementation on how SLQ improves the nominal control input policy to a policy that can reduce the error for an inverted pendulum in each iteration. As you can see that, it doesn't give input policy that can exactly track the trajectory, this is where MPC comes into play.

The best part of SLQ is that it does this entire process in less than 1 second (Used tic toc function in MATLAB to verify!)

SLQ with Waypoint tracking

It can also be made to reach a waypoint while tracking the trajectory - Its given as a soft constraint cleverly through cost function. This cost is added to the cost function. Cost function depends on how far we are from a way-point, waypoint weights and an error function dealing with the time allowed to reach the waypoint.

A video of how the SLQ improves its control policy in each iteration to reach the waypoint while parallely trying to align with the desired trajectory is shown below.

Model Predictive Control with SLQ optimization

For analyzing the algorithm, the problem we took up was, to swing-up a pendulum to its vertical position from rest to track a particular trajectory that has 200 steps (200 input values and 201 states if we consider them as way-points). With a time horizon of 5 steps receding horizon, the SLQ returns a control policy for the 5 steps, the first step alone is taken using the first control input value returned by the SLQ algorithm. Similarly, this process is repeated for 200 steps.

A snippet from the paper of MPC algorithm and how it integrates with the SLQ is given below. And later, a video of our implementation on inverted pendulum is attached too.

Comparison

1. Removed feedforward term in SLQ and solved the MPC - Takes less time as there is no line search happening without feed-forward happening, but error is more as compared to SLQ.

2. Used an iLQR instead of SLQ for optimization within MPC - Least error but this takes the highest time.

Observation - The ILQR optimization in MPC tracks the trajectory slightly better than SLQ based on error, but takes 38-40 seconds while the SLQ takes only 1 - 1.5 seconds to track the trajectory for the problem we chose.

iLQR-MPC Video x4 Speed

RESULTS AND OBSERVATION

1. The SLQ can plan within 1-2 seconds for this system, which justifies the fact that its a Fast MPC, very fast as compared to other techniques.

2. This means that in-between its tracking, if you give a new trajectory, it can replan very quickly and track that on the fly!

3. Super useful strategy for Real-time control in trajectory tracking and optimization